I’ve been playing with XML & XSLT on and off for 6 years. I’ve toyed with parser scripts. I think, at some point, I even wasted a few days making an Ant compiler to generate a static site for a client of mine… but that’s as far as it went.

Recently, I decided to play again and set up an experimental playground over at ethernick.com. What’s fun about it (“there’s no place like 127.0.0.1” kinda fun) is that it’s all XML. Right now, there isn’t a single HTML page. I’ve parsed out RSS & RDF, and I’ve even applied XSLT to a quasi-HTML index page. But don’t let the letters fool you: it’s not HTML.
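For anyone who hasn’t seen this kind of setup, here is a minimal sketch of the idea. The file names (`index.xml`, `index.xsl`) and the `page`, `title` and `para` elements are made up for illustration; this is not the actual ethernick.com source. The XML file points at a stylesheet with an `xml-stylesheet` processing instruction:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- index.xml (hypothetical): the browser fetches this file and applies the stylesheet named below -->
<?xml-stylesheet type="text/xsl" href="index.xsl"?>
<page>
  <title>Playground</title>
  <para>Just a little page with a couple of paragraphs.</para>
</page>
```

And the stylesheet turns that XML into the HTML a visitor ends up looking at:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- index.xsl (hypothetical): transforms the XML above into HTML in the browser -->
<xsl:stylesheet version="1.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:output method="html"/>
  <xsl:template match="/page">
    <html>
      <head><title><xsl:value-of select="title"/></title></head>
      <body>
        <h1><xsl:value-of select="title"/></h1>
        <!-- one HTML paragraph per para element in the source -->
        <xsl:for-each select="para">
          <p><xsl:value-of select="."/></p>
        </xsl:for-each>
      </body>
    </html>
  </xsl:template>
</xsl:stylesheet>
```

The point of the sketch is that the file on the server never contains any HTML; the HTML only exists after a browser runs the transform.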

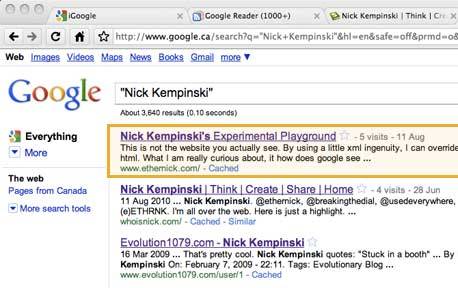

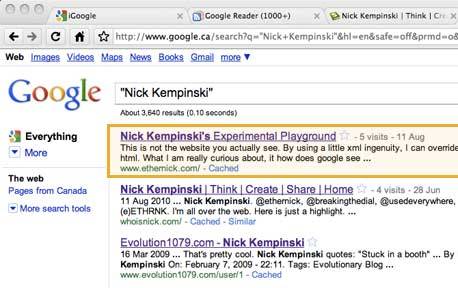

What caught my eye was that although the very first page is “.xml” and uses XML doctypes and XSLT calls, Google decided to index it as if it were HTML. So I added a fake

What I ended up wondering is this: if Google sees something different from what visitors see, is this a potential hole for scamming? Here’s the story I play out in my mind:

Say I work for a seedy business, and my job there is to lure unsuspecting people to my site. These people may even be fooled into clicking a link or two. And while they are clicking away to unauthorized sites and scammy links, Google doesn’t see any of it. Google is unsuspecting as well, because as far as it can tell, it’s a little page with a couple of paragraphs.

Now… I admit this isn’t fully thought out. I admit that there are holes. I’m wondering, in real-world practice, why would someone make a page about real estate and then show visitors porn? It’s more of a surprise factor than anything. So, is it really a problem after all?

I don’t know. But I guess that’s why it’s an experiment. I guess the discussion is open.